
Phi-1 is a compact but highly effective model designed specifically for code generation. Compared with earlier code models, Phi-1 delivers strong performance on coding and related tasks while using far fewer parameters and a much smaller training dataset.

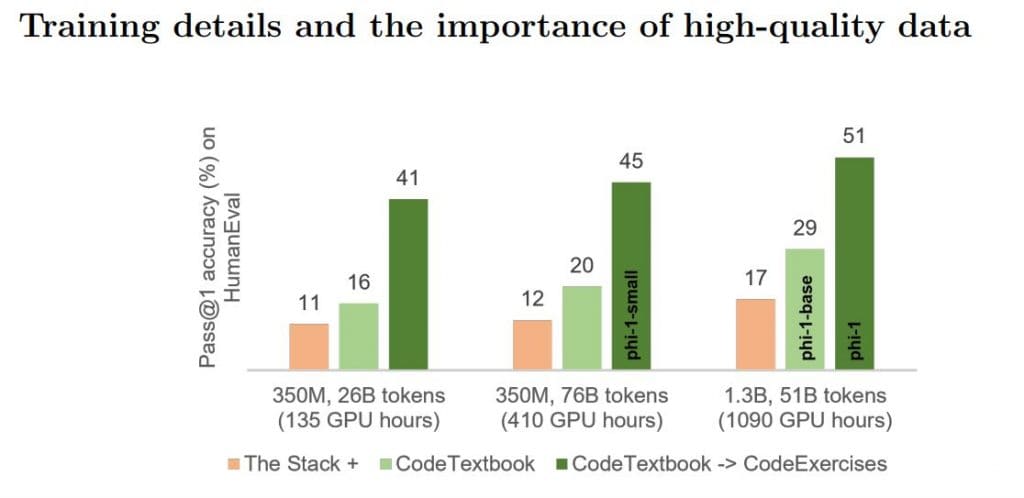

Phi-1, a Transformer-based model, stands out with just 1.3 billion parameters, a fraction of the size of competing models. Remarkably, it was trained in only four days on eight A100 GPUs. Training relied on carefully curated “textbook quality” data: 6 billion tokens filtered from the web, plus 1 billion tokens of synthetic textbooks and exercises generated with GPT-3.5.
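The training budget quoted above translates into a few derived figures. This short Python sketch uses only the article's numbers. Note that 7 billion tokens is the size of the curated dataset, not necessarily the number of tokens the model processed during training, which the article does not state.

```python
# Back-of-the-envelope figures derived only from the numbers quoted in the article.
NUM_GPUS = 8
TRAINING_DAYS = 4
WEB_TOKENS = 6_000_000_000        # filtered "textbook quality" web data
SYNTHETIC_TOKENS = 1_000_000_000  # GPT-3.5-generated textbooks and exercises

gpu_hours = NUM_GPUS * TRAINING_DAYS * 24          # total A100 GPU-hours
total_tokens = WEB_TOKENS + SYNTHETIC_TOKENS       # dataset size, not tokens seen
synthetic_share = SYNTHETIC_TOKENS / total_tokens  # fraction of synthetic data

print(f"GPU-hours: {gpu_hours}")
print(f"Dataset size: {total_tokens / 1e9:.0f}B tokens")
print(f"Synthetic share: {synthetic_share:.1%}")
```

Running it prints 768 GPU-hours, a 7B-token dataset, and a synthetic share of about 14.3%.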

Despite its small scale, Phi-1 achieves impressive results, with pass@1 accuracy of 50.6% on HumanEval and 55.5% on MBPP. It also displays surprising emergent properties compared with Phi-1-base, the same model before finetuning, and Phi-1-small, a smaller model with 350 million parameters. Even at its reduced size, Phi-1-small still achieves a respectable 45% on HumanEval.
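pass@1 measures how often a generated solution passes a benchmark problem's unit tests on the first attempt. Here is a minimal Python sketch of the unbiased pass@k estimator commonly used with HumanEval. The function names and the `(n, c)` input format are illustrative assumptions, and the article does not say how many samples per problem were drawn to produce Phi-1's scores.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of samples generated for the problem
    c: number of those samples that pass the unit tests
    k: number of attempts being scored (k=1 for pass@1)
    """
    if n - c < k:
        # Every subset of k samples contains at least one correct sample.
        return 1.0
    # 1 minus the probability that k samples drawn without replacement are all wrong.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over a benchmark, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results: 4 problems, one sample each, two of them solved.
print(benchmark_score([(1, 1), (1, 0), (1, 1), (1, 0)], k=1))
```

With a single sample per problem (n = 1), pass@1 reduces to the fraction of problems solved, so the hypothetical example above scores 0.5.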

Phi-1's success is largely attributed to the high-quality data used during training. Just as a comprehensive, well-crafted textbook helps students master a new subject, the researchers focused on producing “textbook quality” data to improve the language model's learning efficiency. The result is a model that outperforms most open-source models on coding benchmarks such as HumanEval and MBPP, despite its smaller model size and training set.

However, Phi-1 has some limitations compared with larger models. First, it specializes in Python and lacks the versatility of multi-language models. Second, it lacks the domain-specific knowledge found in larger models, such as programming against specific APIs or using less common packages. Finally, because the datasets are highly structured and lack diversity in language and style, Phi-1 is less robust to stylistic variations or errors in the prompt.

The researchers acknowledge these limitations and believe further work can address each of them. They suggest generating the synthetic data with GPT-4 instead of GPT-3.5, since they observed a high error rate in the GPT-3.5 output. Despite those errors, Phi-1 shows remarkable coding proficiency, echoing a previous study in which a language model produced correct answers even when trained on data with a 100% error rate.
