
Researchers at MIT and Google Brain have proposed a novel method known as Multi-Agent Debates, which uses inter-model communication to improve the quality of reasoning and output generation in Large Language Models (LLMs). The method has the potential to change the way we interact with and use language models in a range of applications.

Drawing inspiration from the work of Marvin Minsky, a prominent advocate of agent-based AI and author of “The Society of Mind”, the MIT and Google Brain researchers sought to improve LLMs by incorporating the concept of agents working together to create intelligent behaviour. Minsky’s theory posits that the mind is not a single entity but rather a collection of smaller processes called “agents” that collaborate to exhibit intelligent behaviour.

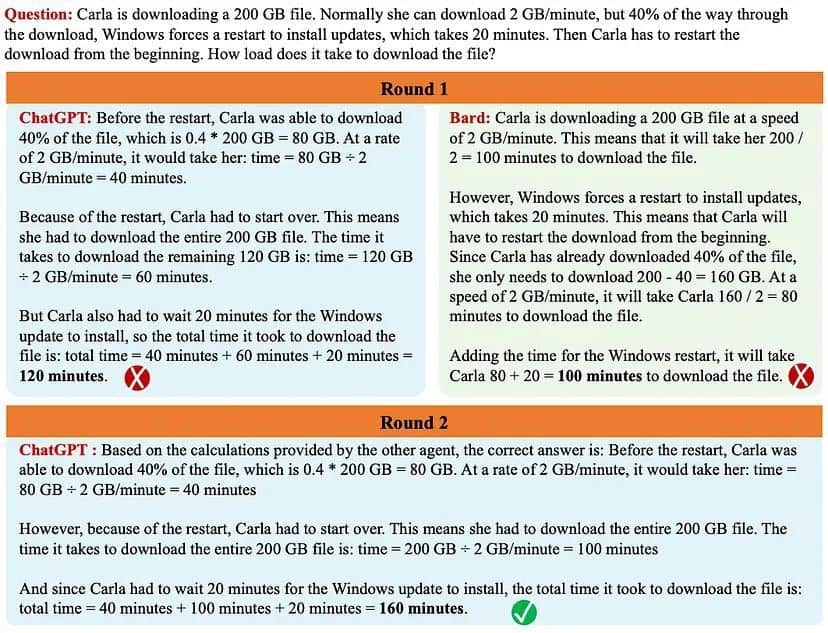

The Multi-Agent Debates approach brings this concept to LLMs, creating a space where multiple agents engage in debates. Each agent receives a request or prompt, and its response is shared with all other agents. The context for each LLM at each step consists of its own reasoning as well as the reasoning of its neighbouring agents. Through multiple iterations of these debates, the models converge to a stable generation, much like humans reaching a common conclusion during a discussion.

To outline the algorithm in simpler terms:

1. Multiple instances of language models generate separate candidate responses for a given query.
2. Each model instance reads and critiques the responses of all other models, incorporating this feedback to update its own response.
3. This process is repeated for several rounds until a final answer is reached.
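The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: an "agent" is modelled as any function from a prompt string to a response string, and the names `Agent`, `build_debate_prompt`, `run_debate`, and `make_toy_agent`, as well as the prompt wording, are hypothetical. The toy agent stands in for a real LLM call so that the loop can be run without any model.

```python
from collections import Counter
from typing import Callable, List

# Assumption: an agent is any callable mapping a prompt to a response.
# In a real system this would wrap an LLM call.
Agent = Callable[[str], str]


def build_debate_prompt(query: str, own: str, others: List[str]) -> str:
    """Combine the query, the agent's previous answer, and its peers' answers."""
    peer_block = "\n".join(f"- {r}" for r in others)
    return (
        f"Question: {query}\n"
        f"Your previous answer: {own}\n"
        f"Answers from other agents:\n{peer_block}\n"
        "Critique these answers and give an updated answer."
    )


def run_debate(agents: List[Agent], query: str, rounds: int = 2) -> List[str]:
    """Run a fixed number of debate rounds and return each agent's last answer."""
    # Step 1: every agent answers the query independently.
    responses = [agent(query) for agent in agents]
    # Steps 2 and 3: every agent revises its answer after reading the others.
    for _ in range(rounds):
        updated = []
        for i, agent in enumerate(agents):
            others = responses[:i] + responses[i + 1:]
            updated.append(agent(build_debate_prompt(query, responses[i], others)))
        responses = updated
    return responses


def make_toy_agent(initial: str) -> Agent:
    """Hypothetical stand-in for an LLM: adopts the most common answer it sees."""
    own_prefix = "Your previous answer: "

    def agent(prompt: str) -> str:
        if "Answers from other agents:" not in prompt:
            return initial  # first round: no peers to read yet
        seen = []
        for line in prompt.splitlines():
            if line.startswith(own_prefix):
                seen.append(line[len(own_prefix):])
            elif line.startswith("- "):
                seen.append(line[2:])
        return Counter(seen).most_common(1)[0][0]

    return agent
```

With three toy agents that start from "4", "4", and "5", a single round is enough for the dissenting agent to adopt the majority answer. A fixed `rounds` count is used here only for simplicity.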

This approach encourages models to generate responses that align with their internal critiques and are reasonable in light of the responses from other agents. The resulting consensus among the models supports multiple chains of reasoning and allows for the exploration of various possible answers before arriving at a final response.

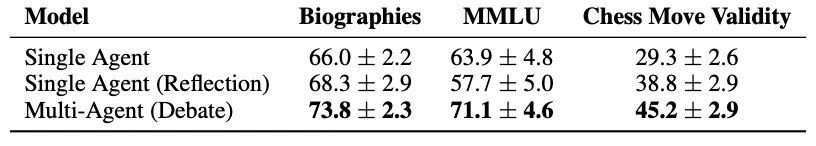

The MIT AI Lab conducted experiments to evaluate the effectiveness of this approach across different domains. The evaluation included tasks such as accurately stating facts about the biography of a famous computer scientist, validating factual knowledge questions, and predicting the next best move in a chess game. The results, as shown in Table 1 below, revealed that the Society of Mind approach outperformed the other options in all categories, indicating that it improves LLMs’ reasoning capabilities.

While this development holds considerable promise, questions about the stopping criterion for these debates remain. Determining when to conclude the discussion and settle on a final answer is an essential consideration. Researchers and experts are actively exploring metrics such as perplexity or BLEU to define this criterion and ensure optimal performance.
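To make the stopping question concrete, here is one simple possibility, offered purely as an assumption: end the debate once a chosen fraction of agents give the same answer, or once a round budget is used up. This is not the criterion from the research, and it does not use perplexity or BLEU. The function names `agreement` and `should_stop` and the default `threshold` and `max_rounds` values are hypothetical.

```python
from collections import Counter
from typing import List


def agreement(responses: List[str]) -> float:
    """Fraction of agents giving the most common answer, after light normalisation."""
    if not responses:
        return 0.0
    normalised = [r.strip().lower() for r in responses]
    top_count = Counter(normalised).most_common(1)[0][1]
    return top_count / len(normalised)


def should_stop(responses: List[str], round_idx: int,
                threshold: float = 1.0, max_rounds: int = 5) -> bool:
    """Stop when enough agents agree, or when the round budget is spent."""
    return agreement(responses) >= threshold or round_idx >= max_rounds
```

Exact string matching only suits short answers such as a number or a chess move. Free-text answers would need a softer similarity measure, which is where metrics like those mentioned above could come in.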

The fusion of the Chain of Thought approach with the concept of the Society of Mind brings us closer to a future where language models exhibit enhanced reasoning and produce more accurate and nuanced responses.
