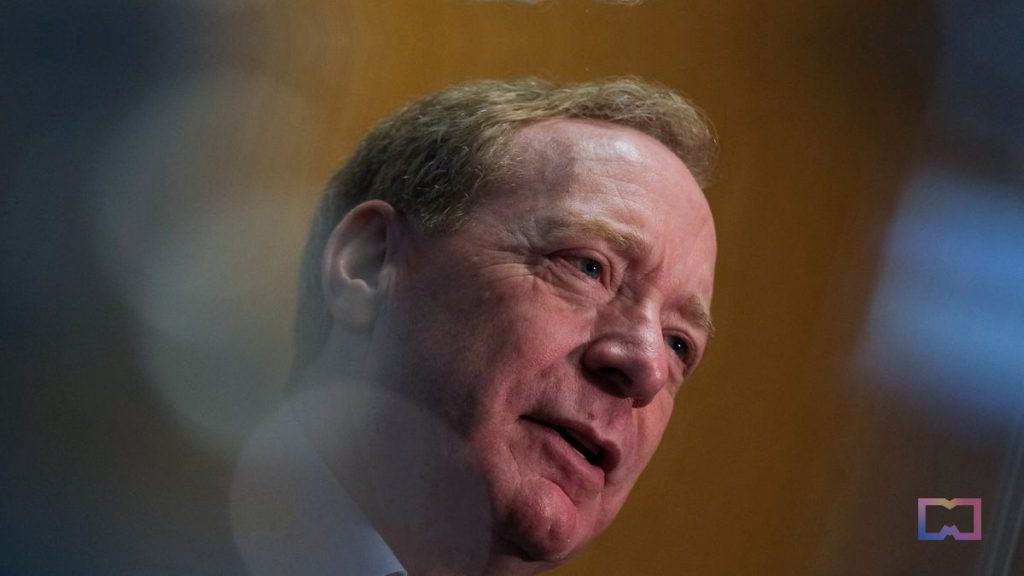

Microsoft’s president Brad Smith has raised concerns about artificial intelligence, emphasizing the potential harm caused by deepfakes: deceptive content that appears real but is actually fabricated. During a speech in Washington on the regulation of AI, Smith underlined the critical need for mechanisms that help people differentiate between genuine content and AI-generated material, especially when there is potential for malicious intent.

“We’re going have to address the issues around deep fakes. We’re going to have to address in particular what we worry about most foreign cyber influence operations, the kinds of activities that are already taking place by the Russian government, the Chinese, the Iranians,” he said.

According to Reuters, Smith has advocated for licensing for the most critical forms of AI “with obligations to protect the security, physical security, cybersecurity, national security.” He also stated that a new or updated generation of export controls is necessary to prevent the theft or misuse of these models in ways that would violate the country’s export control requirements.

During his speech, Smith argued that people should be held accountable for any problems arising from the use of AI. He called on lawmakers to implement safety measures to maintain human control over AI systems that govern critical infrastructure such as the electric grid and water supply. Smith also advocated for establishing a system similar to “Know Your Customer,” in which developers of powerful AI models are required to monitor how their technology is used and to provide transparency to the public regarding AI-generated content, enabling people to identify manipulated videos.

AI regulation is currently a global debate. Recently, OpenAI’s CEO Sam Altman testified before the U.S. Senate, emphasizing the importance of AI regulation. Altman expressed his company’s willingness to help policymakers find a balance between promoting safety and ensuring that people can benefit from AI technology. However, Altman does not support the EU AI Act and has stated that OpenAI may leave Europe if the rule requiring the disclosure of copyrighted material used in AI system development is enforced.
