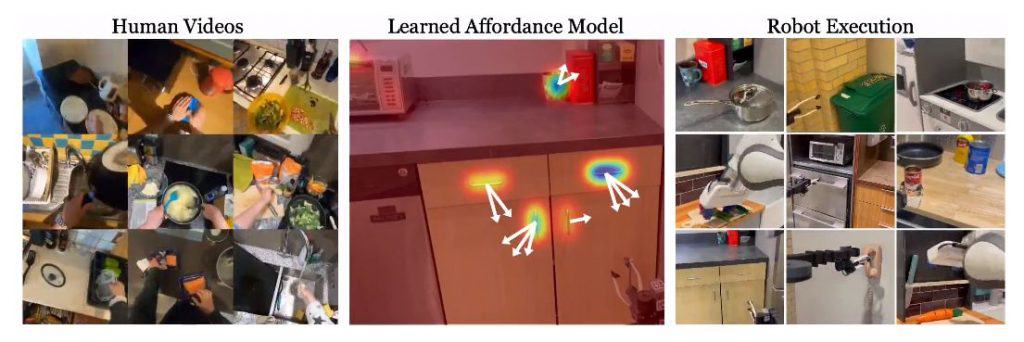

Meta AI has unveiled a new algorithm that allows robots to learn and replicate human actions by watching YouTube videos. In a recent paper titled "Affordances from Human Videos as a Versatile Representation for Robotics," the authors explore how videos of human interactions can be used to train robots to perform complex tasks.

This research aims to bridge the gap between static datasets and real-world robot applications. Earlier models have performed well on static datasets, but applying them directly to robots has remained a challenge. The researchers propose training a visual affordance model on internet videos of human behavior as a solution. The model estimates where and how a human is likely to interact with a scene, which gives robots useful information about what to do.

The concept of "affordances" is central to this approach. Affordances are the potential actions or interactions that an object or environment offers. By learning affordances from human videos, the robot gains a versatile representation that lets it perform a variety of complex tasks. The researchers integrate their affordance model with four different robot learning paradigms: offline imitation learning, exploration, goal-conditioned learning, and action parameterization for reinforcement learning.
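To make the idea of an affordance as a robot-usable representation more concrete, here is a minimal sketch, assuming an affordance is stored as 2D pixel contact points plus a post-contact wrist trajectory, of how it could be flattened into a small parameter vector, in the spirit of the "action parameterization for reinforcement learning" paradigm mentioned above. The `Affordance` class and its methods are hypothetical illustrations, not code from Meta's project.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels


@dataclass
class Affordance:
    """Hypothetical container for one predicted affordance."""

    contact_points: List[Point]  # where a hand is likely to touch the object
    trajectory: List[Point]      # where the wrist is likely to move after contact

    def contact_centroid(self) -> Point:
        """Average the candidate contact points into a single target location."""
        if not self.contact_points:
            raise ValueError("affordance has no contact points")
        n = len(self.contact_points)
        return (
            sum(x for x, _ in self.contact_points) / n,
            sum(y for _, y in self.contact_points) / n,
        )

    def as_action_parameters(self) -> List[float]:
        """Flatten into [contact_x, contact_y, dx, dy]: reach the contact
        location, then move by the displacement to the last waypoint."""
        if not self.trajectory:
            raise ValueError("affordance has no post-contact trajectory")
        cx, cy = self.contact_centroid()
        ex, ey = self.trajectory[-1]
        return [cx, cy, ex - cx, ey - cy]
```

For example, `Affordance([(10.0, 20.0), (30.0, 40.0)], [(25.0, 35.0), (40.0, 50.0)]).as_action_parameters()` returns `[20.0, 30.0, 20.0, 20.0]`. A real system would likely lift these pixel coordinates into 3D using depth or camera calibration before sending them to a robot; the sketch stays in 2D for simplicity.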

To extract affordances, the researchers use large-scale human video datasets such as Ego4D and Epic Kitchens. They apply off-the-shelf hand-object interaction detectors to identify the contact region and track the wrist's trajectory after contact. An important challenge arises because the human is still visible in these frames, whereas a robot will see the scene without a human in it, which causes a distribution shift. To address this, the researchers use the available camera information to project the contact points and post-contact trajectory into a human-agnostic frame, which then serves as input to their model.
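The article says camera information is used for this projection but does not give the details. The following is a minimal sketch, assuming the camera motion between each video frame and a reference frame in which no human is visible is summarized as a 3x3 planar homography (estimated elsewhere, for example by feature matching), and that a hand-object detector has already produced per-frame contact flags, contact points, and wrist positions. All function names are hypothetical; this is not Meta's released code.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]             # (x, y) in image pixels
Homography = Sequence[Sequence[float]]  # 3x3 matrix mapping a frame to the reference frame


def first_contact_frame(contact_flags: Sequence[bool]) -> int:
    """Return the index of the first frame flagged as hand-object contact."""
    for i, in_contact in enumerate(contact_flags):
        if in_contact:
            return i
    raise ValueError("no hand-object contact detected in this clip")


def project_points(H: Homography, points: Sequence[Point]) -> List[Point]:
    """Map pixel points through a 3x3 homography using homogeneous coordinates."""
    projected = []
    for x, y in points:
        u = H[0][0] * x + H[0][1] * y + H[0][2]
        v = H[1][0] * x + H[1][1] * y + H[1][2]
        w = H[2][0] * x + H[2][1] * y + H[2][2]
        if abs(w) < 1e-12:
            raise ValueError("point maps to infinity under this homography")
        projected.append((u / w, v / w))
    return projected


def to_human_agnostic_frame(
    contact_flags: Sequence[bool],
    contact_points: Sequence[Point],
    wrist_track: Sequence[Point],
    homographies: Sequence[Homography],
) -> Tuple[List[Point], List[Point]]:
    """Re-express the contact points (observed at the first contact frame) and
    the post-contact wrist track in the human-free reference frame.

    homographies[i] maps frame i into the reference frame, and wrist_track[i]
    is the wrist position detected in frame i.
    """
    if not (len(contact_flags) == len(wrist_track) == len(homographies)):
        raise ValueError("need one contact flag, wrist point and homography per frame")
    t = first_contact_frame(contact_flags)
    contact = project_points(homographies[t], contact_points)
    # Each wrist position is mapped with its own frame's homography,
    # since the camera may move between frames.
    trajectory = [
        project_points(homographies[i], [wrist_track[i]])[0]
        for i in range(t, len(wrist_track))
    ]
    return contact, trajectory
```

A homography is exact only for a planar scene or a purely rotating camera and is an approximation otherwise, which is one reason a production pipeline might prefer depth or full camera poses when they are available.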

Previously, robots were capable of mimicking actions, but only within the specific environments they had been trained in. With the latest algorithm, researchers have made significant progress in "generalizing" robot actions: robots can now apply their acquired knowledge in new and unfamiliar environments. This achievement aligns with the vision of achieving Artificial General Intelligence (AGI) advocated by AI researcher Yann LeCun.

Meta AI is committed to advancing the field of computer vision and plans to share the project's code and dataset. This will allow other researchers and developers to explore and build on the technology. With wider access to the code and dataset, work on self-learning robots that acquire new skills from YouTube videos should continue to progress.

By leveraging the vast number of instructional videos online, robots can become more versatile and adaptable across different environments.
