
Deep learning company Deci has unveiled DeciCoder, a new generative AI foundation model that can produce code in various programming languages. According to the company, the model has 1 billion parameters and a large context window of 2048 tokens, which allows it to generate high-quality and diverse code snippets.

Yonatan Geifman, CEO and co-founder of Deci, told Metaverse Post that model inference cost is a major concern for generative AI applications such as code generation. The high cost is mainly due to the size, computational requirements, and memory intensity of the underlying large language models (LLMs). As a result, fast generation requires expensive high-end hardware.

“A solution to counteract these exorbitant costs and reduce inference expenditure by 4x is to develop more efficient models,” Geifman told Metaverse Post. “These models must be capable of fast inference on more affordable hardware without sacrificing accuracy. That’s exactly what DeciCoder does, and it stands out in this regard.”

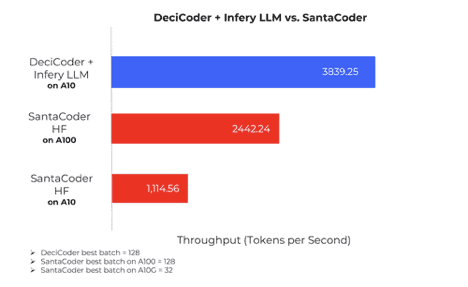

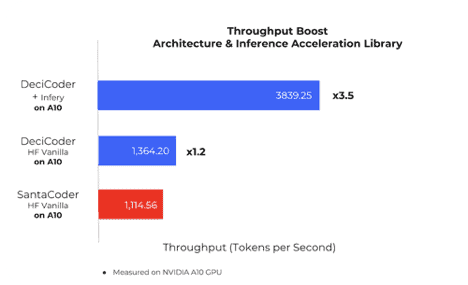

The company said that when running on NVIDIA’s A10G, a less expensive GPU, DeciCoder’s inference speed surpasses that of SantaCoder, the most popular model in the 1-billion-parameter range, running on the pricier NVIDIA A100. Moreover, DeciCoder on the A10 is 3.5 times faster than SantaCoder on the A10 and 1.6 times faster than SantaCoder on the A100.
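The two speedup figures above share the same reference system (DeciCoder on the A10), so dividing one by the other gives a rough estimate of how much faster SantaCoder itself runs on the A100 than on the A10. The sketch below does only that arithmetic. It assumes both figures were measured under the same conditions, which the article does not state, and the function name is hypothetical.

```python
# Speedups quoted in the article: DeciCoder on the A10 relative to each baseline.
SPEEDUP_VS_SANTACODER_A10 = 3.5
SPEEDUP_VS_SANTACODER_A100 = 1.6


def implied_baseline_ratio(speedup_vs_slow: float, speedup_vs_fast: float) -> float:
    """Return how much faster the fast baseline is than the slow baseline.

    Both speedups must be measured against the same reference system;
    that is an assumption here, not something the article confirms.
    """
    return speedup_vs_slow / speedup_vs_fast


ratio = implied_baseline_ratio(SPEEDUP_VS_SANTACODER_A10, SPEEDUP_VS_SANTACODER_A100)
print(f"Implied SantaCoder speedup from A10 to A100: {ratio:.2f}x")
```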

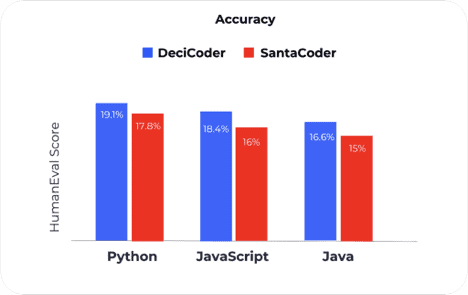

Geifman asserts that DeciCoder also delivers outstanding accuracy. The model outperforms SantaCoder in accuracy across all three programming languages they were both trained on: Python, JavaScript, and Java.

He said that the generative model delivers significantly lower inference costs when used with Deci’s Infery tool: a 71.4% reduction in cost per 1,000 tokens compared to SantaCoder’s performance on the HuggingFace Inference Endpoint.
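A percentage reduction can be hard to compare with the "times faster" figures quoted elsewhere in the article, so the following minimal sketch converts between the two. The helper names are hypothetical. Note that a 71.4% reduction works out to roughly a 3.5x cost ratio, the same number as the A10 speedup quoted above; whether Deci derived one figure from the other is not stated in the article.

```python
def cost_ratio_from_reduction(reduction_pct: float) -> float:
    """Convert a percentage cost reduction into a 'times cheaper' ratio."""
    remaining_fraction = 1.0 - reduction_pct / 100.0
    if remaining_fraction <= 0.0:
        raise ValueError("reduction must be below 100%")
    return 1.0 / remaining_fraction


def reduction_from_cost_ratio(ratio: float) -> float:
    """Convert a 'times cheaper' ratio into a percentage cost reduction."""
    if ratio <= 0.0:
        raise ValueError("ratio must be positive")
    return (1.0 - 1.0 / ratio) * 100.0


# 71.4% is the reduction reported in the article.
times_cheaper = cost_ratio_from_reduction(71.4)
print(f"A 71.4% reduction is about {times_cheaper:.2f}x cheaper per 1,000 tokens")
print(f"A 3.5x ratio is a {reduction_from_cost_ratio(3.5):.1f}% reduction")
```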

“DeciCoder reduces computational costs during inference by allowing businesses to migrate their code generation workloads to cheaper hardware without sacrificing speed or accuracy or, alternatively, generate more code in less GPU time,” Geifman said.

Furthermore, in conjunction with Infery (Deci’s inference acceleration library) on an A10G GPU, DeciCoder reportedly helps reduce carbon footprint. The company asserts that it decreases annual carbon emissions by 324 kg CO2 per model instance compared to SantaCoder on identical hardware.

Advancing Code Generation with Impressive Benchmarks

Geifman explained that two main technological distinctions contribute to DeciCoder’s enhanced throughput and reduced memory usage: DeciCoder’s innovative model architecture and the use of Deci’s inference acceleration library.

“Deci’s architecture was generated by its proprietary Neural Architecture Search technology, AutoNAC, which has generated multiple high-efficiency foundation models in both computer vision and NLP,” he said. “The intrinsic design of the model architecture endows DeciCoder with superior throughput and accuracy. While DeciCoder, like SantaCoder and OpenAI’s GPT models, is based on the transformer architecture, it diverges in its unique implementation of Grouped Query Attention (GQA).”

SantaCoder and StarCoder use Multi-Query Attention instead of Multi-Head Attention for greater efficiency, leading to quicker inference. However, this efficiency comes at the cost of reduced quality and accuracy compared to Multi-Head Attention.

Deci’s GQA strikes a better balance between accuracy and efficiency than Multi-Query Attention. It maintains similar efficiency levels while delivering significantly improved accuracy.
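The trade-off between the three attention variants comes down to how many key/value (KV) heads the query heads share: Multi-Head Attention gives every query head its own KV head, Multi-Query Attention shares a single KV head across all query heads, and Grouped Query Attention sits in between. The sketch below illustrates only that sharing pattern and its effect on KV-cache size. The head counts, head dimension, and layer count are hypothetical values chosen for illustration, since the article does not give DeciCoder's configuration; only the 2048-token context length comes from the article.

```python
def kv_head_for_query_head(q_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Return the index of the KV head that a given query head attends with."""
    if num_q_heads % num_kv_heads != 0:
        raise ValueError("num_q_heads must be divisible by num_kv_heads")
    group_size = num_q_heads // num_kv_heads  # query heads per shared KV head
    return q_head // group_size


def kv_cache_bytes(num_kv_heads: int, head_dim: int, seq_len: int,
                   num_layers: int, bytes_per_value: int = 2) -> int:
    """KV-cache size for one sequence; the leading 2 counts keys and values."""
    return 2 * num_layers * seq_len * num_kv_heads * head_dim * bytes_per_value


# Hypothetical model shape (NOT DeciCoder's published configuration).
NUM_Q_HEADS = 16
HEAD_DIM = 128
NUM_LAYERS = 20
SEQ_LEN = 2048  # context window size reported in the article

for name, num_kv_heads in [("MHA", 16), ("GQA", 4), ("MQA", 1)]:
    mapping = [kv_head_for_query_head(q, NUM_Q_HEADS, num_kv_heads)
               for q in range(NUM_Q_HEADS)]
    size_mib = kv_cache_bytes(num_kv_heads, HEAD_DIM, SEQ_LEN, NUM_LAYERS) / 2**20
    print(f"{name}: {num_kv_heads} KV heads, cache {size_mib:.0f} MiB, mapping {mapping}")
```

With fewer KV heads, the cache that must be read at every generated token shrinks proportionally, which is where the inference speedup of MQA and GQA comes from; GQA keeps more distinct KV heads than MQA, which is the usual explanation for its better accuracy.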

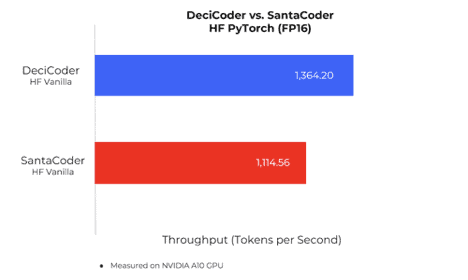

The difference becomes more evident when comparing DeciCoder and SantaCoder, both deployed on HuggingFace Inference Endpoints. DeciCoder achieves 22% higher throughput and demonstrates improved accuracy, as shown in the second chart and the following chart.

Deci said that its LLM inference acceleration library, Infery, uses advanced proprietary engineering techniques developed by the company’s research and engineering team to accelerate inference.

The company claims that these techniques result in an additional boost in throughput and can be applied to any LLM, not only Deci’s. Moreover, the company said that Infery is relatively easy to use, allowing developers to apply complex, highly advanced techniques with just a few lines of code.

Using AutoNAC for an Optimal Balance of Accuracy and Speed

According to Geifman, the quest for the “optimal” neural network architecture has historically been a labor-intensive manual exploration. While this manual approach often yields results, it is highly time-consuming and often falls short of pinpointing the most efficient neural networks.

“The AI community recognized the promise of Neural Architecture Search (NAS) as a potential game-changer, automating the development of superior neural networks. However, the computational demands of traditional NAS methods limited their accessibility to a few organizations with vast resources,” Geifman told Metaverse Post.

Deci claims that its “AutoNAC” feature can ease NAS processes by offering a compute-efficient method to produce NAS-generated algorithms, bridging the gap between potential and feasibility.

The company explained that AutoNAC is an algorithm that takes as input specific dataset characteristics, a model task, performance targets, and an inference environment, and outputs an optimal neural network that delivers the best balance between accuracy and inference speed for the specified requirements.
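AutoNAC is proprietary and the article does not describe how it searches, so the following is not its algorithm. It is only a toy sketch of the input/output shape described above: given candidate architectures that have already been scored for accuracy and for latency on the target hardware, pick the most accurate one that meets the performance target. The `Candidate` class, the `select_architecture` function, and every number below are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Candidate:
    """A hypothetical, already-evaluated architecture."""
    name: str
    accuracy: float    # task metric on the target dataset, higher is better
    latency_ms: float  # measured in the target inference environment


def select_architecture(candidates: Iterable[Candidate],
                        max_latency_ms: float,
                        min_accuracy: float = 0.0) -> Optional[Candidate]:
    """Return the most accurate candidate that satisfies both targets.

    Ties on accuracy are broken in favor of lower latency.
    Returns None when no candidate meets the constraints.
    """
    feasible = [c for c in candidates
                if c.latency_ms <= max_latency_ms and c.accuracy >= min_accuracy]
    if not feasible:
        return None
    return max(feasible, key=lambda c: (c.accuracy, -c.latency_ms))


# Made-up candidates for illustration only.
pool = [
    Candidate("arch_a", accuracy=0.18, latency_ms=40.0),
    Candidate("arch_b", accuracy=0.19, latency_ms=55.0),
    Candidate("arch_c", accuracy=0.21, latency_ms=90.0),
]

best = select_architecture(pool, max_latency_ms=60.0)
print(best)
```

A real NAS system would also have to generate and evaluate the candidates efficiently, which is the hard part the article says AutoNAC addresses; this sketch covers only the final constrained selection.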

In addition to object-detection models such as YOLO-NAS, AutoNAC has already generated transformer-based models for NLP tasks (DeciBert) and computer vision tasks (NAS SegFormer).

The company announced that the rollout of DeciCoder is the first in a series of highly anticipated releases outlining Deci’s Generative AI offering, which are due to be released in the coming weeks.

DeciCoder and its pre-trained weights are now available under the permissive Apache 2.0 License, granting developers broad usage rights and positioning the model for real-world, commercial applications.
