Content moderation has long been considered one of the internet's most challenging problems. Because deciding what content should be allowed on a particular platform is inherently subjective, even professionals find the process hard to manage effectively. However, OpenAI, the creator of ChatGPT, appears to believe it can help in this area.

Content moderation gets automated

OpenAI, a leader in artificial intelligence, is testing the content moderation capabilities of its GPT-4 model.

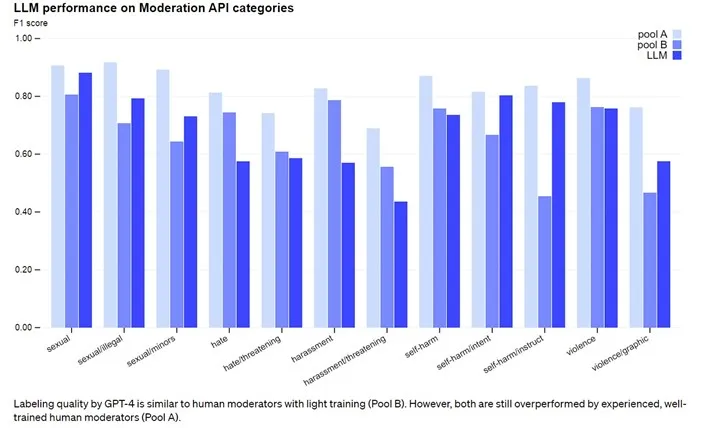

The company aims to use GPT-4 to create a scalable, consistent, and customizable content moderation system. The goal is not only to assist in making content moderation decisions but also to help formulate policies. As a result, targeted policy changes and the development of new policies could be shortened from months to mere hours.

The model is said to be capable of parsing the various rules and nuances in content policies and can adapt instantly to any updates. OpenAI contends that this allows content to be labeled more consistently. In the future, it may be possible for social media platforms like X, Facebook, or Instagram to fully automate their content control and management processes.

Anyone with access to OpenAI's API can already use this approach to build their own AI-powered moderation system. OpenAI also asserts that its GPT-4 audit tools can help companies accomplish roughly six months of work in a single day.
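As a rough illustration of what such a do-it-yourself system might look like, the Python sketch below pairs a written policy with a piece of content and asks a model for a single label. Everything in it is an assumption made for illustration: the policy text, the `K0`/`K1` labels, and the function names are invented, and the model call is passed in as a plain function (`ask_model`) rather than tied to any specific OpenAI API call. A keyword-based stand-in replaces the real model so the example runs on its own.

```python
from typing import Callable, Dict, List

# Hypothetical policy text and label set, invented for illustration only.
POLICY = """\
K0: content is allowed.
K1: content contains harassment or threats and must be removed.
"""
ALLOWED_LABELS = ("K0", "K1")

Message = Dict[str, str]


def build_messages(policy: str, content: str) -> List[Message]:
    """Build a chat-style prompt asking a model to label content under a policy."""
    system = (
        "You are a content moderator. Apply the policy below and reply "
        "with exactly one label.\n\n" + policy
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": content},
    ]


def parse_label(reply: str) -> str:
    """Return a known label, or flag the item for a human if the reply is unusable."""
    label = reply.strip().upper()
    return label if label in ALLOWED_LABELS else "NEEDS_HUMAN_REVIEW"


def moderate(
    content: str,
    ask_model: Callable[[List[Message]], str],
    policy: str = POLICY,
) -> str:
    """Label one piece of content; ask_model is any function that returns the model's reply."""
    return parse_label(ask_model(build_messages(policy, content)))


def fake_model(messages: List[Message]) -> str:
    """Stand-in for a real model call (assumption): a crude keyword check."""
    text = messages[-1]["content"].lower()
    return "K1" if "threat" in text else "K0"


if __name__ == "__main__":
    print(moderate("Have a nice day", fake_model))
    print(moderate("This is a threat", fake_model))
```

Because the policy is just text in the prompt, changing a rule means editing `POLICY` rather than retraining anything, which is the kind of quick policy iteration the article describes. Replies that do not match a known label are routed to a human instead of being guessed at.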

Important for human health

Manual review of traumatic content, on social media in particular, is known to have significant effects on the mental health of human moderators. In 2020, for instance, Meta agreed to pay at least $1,000 each to more than 11,000 moderators for potential mental health issues stemming from their review of content posted on Facebook. Using artificial intelligence to relieve some of the load on human moderators could be highly beneficial.

However, AI models are far from flawless. It is well known that these tools are prone to errors in judgment, and OpenAI acknowledges that human involvement is still essential.
