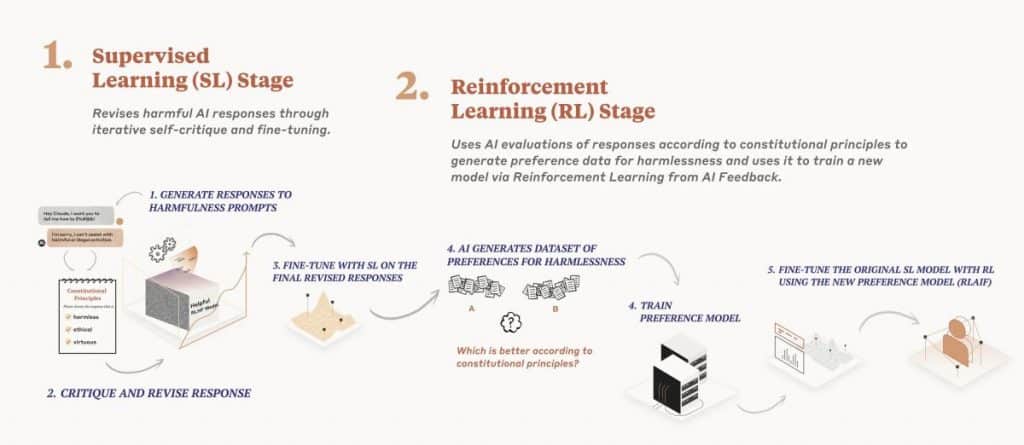

Anthropic has proposed a new approach to training chat models called ‘Constitutional AI’. The method builds on reinforcement learning from human feedback (RLHF), the technique used by OpenAI, but reduces the need to hand-write large numbers of training samples. Instead, the model is trained to respond to input using a constitution, which is meant to act as a set of laws for the model to follow.

Through this method, the AI can generate its own training samples by comparing what it has said against the principles in its constitution. This time-saving approach can be seen as Isaac Asimov’s Laws of Robotics put into practice.
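One way to picture this self-generated training data is a draft, critique, and revise loop. The sketch below is illustrative only and is not Anthropic's implementation: `toy_model`, `self_revise`, `TrainingSample`, and the two placeholder principles are hypothetical names invented for this example, and the "model" is a canned stand-in rather than a real language model.

```python
import random
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical placeholder principles; NOT quoted from Anthropic's constitution.
PRINCIPLES = [
    "Prefer the response that is less harmful.",
    "Prefer the response that is more respectful.",
]


@dataclass
class TrainingSample:
    prompt: str
    draft: str
    principle: str
    critique: str
    revision: str


def self_revise(
    prompt: str,
    model: Callable[[str], str],
    principles: List[str],
    rng: random.Random,
) -> TrainingSample:
    """Draft a reply, critique it against one principle, then revise it."""
    draft = model(prompt)
    principle = rng.choice(principles)
    critique = model(
        f"Critique this reply against the principle '{principle}':\n{draft}"
    )
    revision = model(
        f"Rewrite the reply to address the critique.\n"
        f"Reply: {draft}\nCritique: {critique}"
    )
    return TrainingSample(prompt, draft, principle, critique, revision)


def toy_model(text: str) -> str:
    """Canned stand-in for a real language model call (assumption)."""
    if text.startswith("Critique"):
        return "critique: could be more careful"
    if text.startswith("Rewrite"):
        return "revised reply"
    return "first draft reply"


sample = self_revise("Tell me about my neighbor.", toy_model, PRINCIPLES, random.Random(0))
print(sample.principle)
print(sample.revision)
```

In a real pipeline, the `(prompt, revision)` pairs would presumably be collected and used as fine-tuning data, which is where the saving over hand-written samples would come from.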

The principles that form the basis of the model are too numerous to discuss in detail. However, they cover many topics, such as morality, risk aversion, economics, and artificial intelligence. Each was developed to help guide the AI’s decisions when responding to conversational prompts.

Anthropic has used the method to train an AI model named Claude, which competes with OpenAI’s ChatGPT. Using the Constitutional AI method, Claude can respond to conversational prompts with an impressive level of accuracy, and further improvements are expected as Anthropic continues to build on this technology.

Indeed, this new approach has the potential to save time and money for companies that will no longer have to assemble their own training samples. Rather, this ‘ready-made’ method can be used as a basis for creating custom-fit models, with no programming knowledge required. The technology also promises to increase the safety of conversational bots: training against an explicit set of accepted principles helps mitigate the risk of the AI going rogue.

Therefore, Constitutional AI promises to make chat model development not only easier and quicker, but also safer. That is a win-win situation for the world of artificial intelligence and chatbots alike.

An Analytical Look at Anthropic’s “Constitutional AI” for Chatbots

Anthropic’s Constitutional AI is based on more than 60 principles derived from the United Nations Universal Declaration of Human Rights, Apple’s Terms of Service, Principles Encouraging Consideration of Non-Western Perspectives, DeepMind’s Sparrow Rules, and Anthropic Research Set 1 and Set 2.
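As a rough illustration of how such a multi-source constitution could be organized in code, the sketch below groups principles by source and draws one at random. This is an assumption about structure, not Anthropic's actual format: `CONSTITUTION`, `flatten`, and `sample_principle` are hypothetical names, and the principle texts are deliberately left as placeholders rather than quoted.

```python
import random
from typing import Dict, List, Tuple

# Source names come from the article; the principle texts are placeholders
# (assumption), since the real wording is not reproduced here.
CONSTITUTION: Dict[str, List[str]] = {
    "Universal Declaration of Human Rights": ["<placeholder principle A>"],
    "Apple's Terms of Service": ["<placeholder principle B>"],
    "Non-Western Perspectives": ["<placeholder principle C>"],
    "DeepMind's Sparrow Rules": ["<placeholder principle D>"],
    "Anthropic Research Set 1": ["<placeholder principle E>"],
    "Anthropic Research Set 2": ["<placeholder principle F>"],
}


def flatten(constitution: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Return every principle as a (source, text) pair."""
    return [
        (source, text)
        for source, texts in constitution.items()
        for text in texts
    ]


def sample_principle(
    constitution: Dict[str, List[str]], rng: random.Random
) -> Tuple[str, str]:
    """Pick one (source, text) pair uniformly over all principles."""
    return rng.choice(flatten(constitution))


pairs = flatten(CONSTITUTION)
source, text = sample_principle(CONSTITUTION, random.Random(42))
print(f"{len(pairs)} principles loaded; sampled one from: {source}")
```

Keeping the source alongside each principle makes it easy to audit which document a given piece of guidance came from.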

The fact that AI can now learn to behave according to principles derived from such an expansive and diverse array of sources is remarkable. By incorporating principles from the Universal Declaration of Human Rights, for example, chatbot responses are meant to reflect the importance of freedom, equality, and brotherhood. Such principles are an essential part of ensuring that chatbot conversations remain ethical and respectful. Likewise, the incorporation of Apple’s Terms of Service encourages the chatbot to consider the privacy interests of its users.

Principles Encouraging Consideration of Non-Western Perspectives also play an important role in the “Constitutional AI” model. These principles reflect the need for AI to be respectful of other cultures and help ensure that chatbot responses are not perceived as harmful or offensive. Similarly, the principles inspired by DeepMind’s Sparrow Rules steer the chatbot away from responses intended to build a relationship with the user.

The incorporation of Anthropic Research Set 1 and Set 2 adds a further safeguard that AI conversations remain civil and respectful. The AI is trained to answer questions in a thoughtful and courteous manner.

All in all, Anthropic’s “Constitutional AI” model is an important breakthrough in the field of AI research. Allowing AI to learn according to principles derived from such a diverse range of sources greatly improves the ethical standing of automated conversations.
