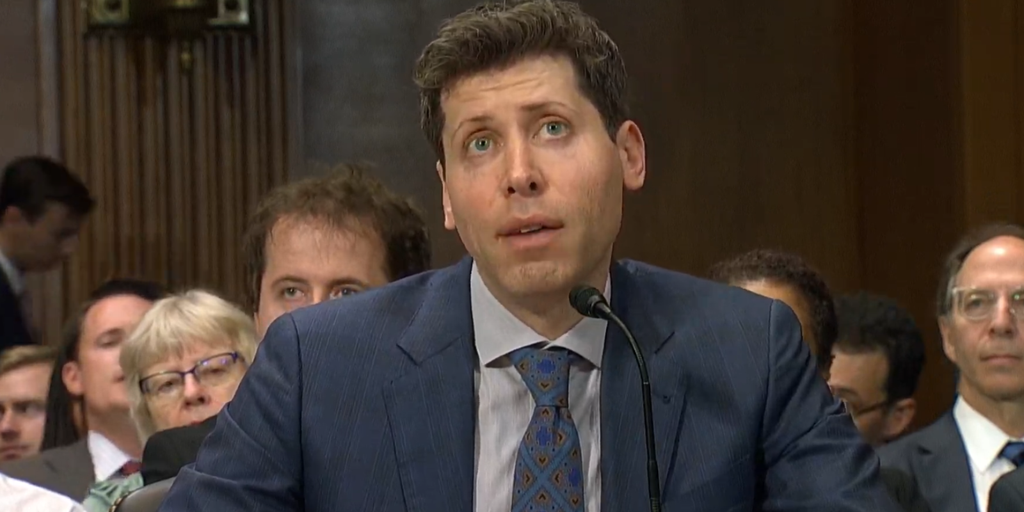

For the second time this month, OpenAI CEO Sam Altman went to Washington to discuss artificial intelligence with U.S. policymakers. Altman appeared before the U.S. Senate Committee on the Judiciary alongside IBM’s Chief Privacy & Trust Officer Christina Montgomery and Gary Marcus, Professor Emeritus at New York University.

When Louisiana Senator John Kennedy asked how they should regulate A.I., Altman said a government office should be formed and put in charge of setting standards.

“I would form a new agency that licenses any effort above a certain scale of capabilities, and that can take that license away and ensure compliance with safety standards,” Altman said, adding that the would-be agency should require independent audits of any A.I. technology.

“Not just from the company or the agency, but experts who can say the model is or is not in compliance with the state and safety thresholds and these percentages of performance on question X or Y,” he said.

While Altman said the government should regulate the technology, he balked at the idea of leading the agency himself. “I love my current job,” he said.

Using the FDA as an example, Professor Marcus said there should be a safety review for artificial intelligence similar to how drugs are reviewed before being allowed to go to market.

“If you are going to introduce something to 100 million people, somebody has to have their eyeballs on it,” Professor Marcus added.

The agency, he said, should be nimble and able to follow what is going on in the industry. It would pre-review projects and also review them after they have been released to the world, with the authority to recall the tech if necessary.

“It comes back to transparency and explainability in A.I.,” IBM’s Montgomery added. “We need to define the highest risk usage, [and] requiring things like impact assessments and transparency, requiring companies to show their work, and protecting data used to train AI in the first place.”

Governments worldwide continue to grapple with the proliferation of artificial intelligence in the mainstream. In December, the European Union passed an AI act to promote regulatory sandboxes established by public authorities to test AI before its release.

“To ensure a human-centric and ethical development of artificial intelligence in Europe, MEPs endorsed new transparency and risk-management rules for A.I. systems,” the European Parliament wrote.

In March, citing privacy concerns, Italy banned OpenAI’s ChatGPT. The ban was lifted in April after OpenAI changed its privacy settings to let users opt out of having their data used to train the chatbot and to turn off their chat history.

“To the question of whether we need an independent agency, I think we don't want to slow down regulation to address real risks right now,” Montgomery continued, adding that regulatory authorities already exist that can regulate in their respective domains.

She did acknowledge that those regulatory bodies are under-resourced and lack the needed powers.

“AI should be regulated at the point of risk, essentially,” Montgomery said. “And that's the point at which technology meets society.”
